Planning the Anzo and AKS Network Architecture

This topic describes the network architecture that supports the Anzo and AKS integration.

When you deploy the K8s infrastructure, Cambridge Semantics strongly recommends that you create the AKS cluster in the same Virtual Network as Anzo. If you create the AKS cluster in a new Virtual Network, you must configure the new network to be routable from the Anzo Virtual Network.
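
If the AKS cluster must be created in a separate Virtual Network, it helps to confirm up front that the two address spaces do not overlap. Overlapping ranges generally cannot be routed to each other, and Azure Virtual Network peering (one common way to connect two Virtual Networks) rejects overlapping address spaces. The following Python sketch uses only the standard library. The function name and the CIDR values are hypothetical and are not part of the az package; replace them with the address spaces of your own networks.

```python
import ipaddress


def overlapping_ranges(anzo_cidrs, aks_cidrs):
    """Return every (anzo, aks) pair of CIDR blocks that overlap."""
    anzo = [ipaddress.ip_network(c) for c in anzo_cidrs]
    aks = [ipaddress.ip_network(c) for c in aks_cidrs]
    return [(str(a), str(b)) for a in anzo for b in aks if a.overlaps(b)]


# Hypothetical address spaces for illustration only.
conflicts = overlapping_ranges(["10.0.0.0/16"], ["10.1.0.0/16"])
if conflicts:
    print("Overlapping address spaces:", conflicts)
else:
    print("No overlap; the networks can be made routable to each other.")
```

An empty result only shows that the ranges are compatible. The routing itself (for example, peering or a gateway) still has to be configured.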

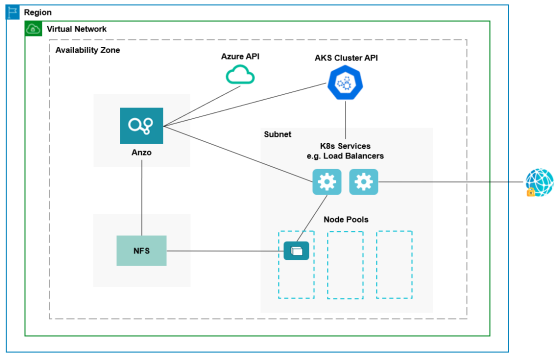

The diagram below shows the typical network components that are employed when an AKS cluster is integrated with Anzo. Most of the network resources shown in the diagram are automatically deployed (and the appropriate routing is configured) according to the values that you supply in the cluster and node group .conf files in the az package on the workstation.

In the diagram, there are two components that you deploy before configuring and creating the K8s resources:

- Anzo: Since the Anzo server is typically deployed before the K8s components, you specify the Anzo network when creating the AKS cluster, ensuring that Anzo and all of the AKS cluster components are in the same network and can talk to each other. Also, make sure that Anzo has access to the Azure and AKS APIs.

- NFS: You must create a network file system (NFS). Anzo then automatically mounts the NFS on the nodes when AnzoGraph, Anzo Unstructured, Spark, and Elasticsearch pods are deployed so that all of the applications can share files. The NFS can have its own subnet, but a dedicated subnet is not required. See Deploying the Shared File System for more information.
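
As a quick way to verify that the Anzo server can reach the Azure and AKS APIs, a basic TCP reachability check can be run from the Anzo host. The sketch below is a minimal example using the Python standard library. The `can_reach` helper is hypothetical, `management.azure.com` is the public Azure Resource Manager endpoint, and the AKS API server hostname is cluster-specific, so it is shown only as a placeholder.

```python
import socket


def can_reach(host, port=443, timeout=5.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


# Example usage (run from the Anzo server):
#   can_reach("management.azure.com")        # Azure Resource Manager endpoint
#   can_reach("<your-aks-api-server-fqdn>")  # placeholder, cluster-specific
```

A successful connection shows only that the network path is open. It does not confirm that Anzo has the permissions required to call the APIs.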

The rest of the components in the diagram are automatically provisioned, depending on your specifications, when the AKS cluster and node pools are created. The az scripts can be used to create a subnet for the K8s services and node pools and configure the routing so that Anzo can communicate with the K8s services and the services can talk to the pods that are deployed in the node pools. In addition, a Standard Load Balancer can be used to provide outbound internet access, such as for pulling container images from the Cambridge Semantics repository.
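
When planning the subnet for the K8s services and node pools, it can be useful to find an address range in the Virtual Network that is not already used by the Anzo or NFS subnets. The following Python sketch illustrates one way to do that with the standard library. The function name, the Virtual Network range, and the existing subnet ranges are all hypothetical; the az scripts do not expose this function.

```python
import ipaddress


def first_free_subnet(vnet_cidr, used_cidrs, new_prefix):
    """Return the first subnet of size /new_prefix inside vnet_cidr that
    does not overlap any subnet in used_cidrs, or None if none is free."""
    vnet = ipaddress.ip_network(vnet_cidr)
    used = [ipaddress.ip_network(c) for c in used_cidrs]
    for candidate in vnet.subnets(new_prefix=new_prefix):
        if not any(candidate.overlaps(u) for u in used):
            return str(candidate)
    return None


# Hypothetical layout: Anzo in 10.0.0.0/24, NFS in 10.0.1.0/24.
print(first_free_subnet("10.0.0.0/16", ["10.0.0.0/24", "10.0.1.0/24"], 22))
# prints 10.0.4.0/22
```

The prefix length to request depends on how many nodes and pods you expect the node pools to host, so treat the /22 above as a placeholder rather than a recommendation.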

When considering the network requirements of your organization and planning how to integrate the new K8s infrastructure in accordance with those requirements, it may help to consider the following types of use cases. Cambridge Semantics supplies sample cluster configuration files in the az/sample_use_cases directory that are tailored for each of these use cases:

- Deploy a private AKS cluster with Azure Managed Identity

In this use case, the AKS cluster is deployed as a private cluster with no public access, and the Azure Managed Identity service is enabled for identity and authorization management. Azure Managed Identity is the recommended method for AKS access control.

- Deploy a public AKS cluster with a new Service Principal

In this use case, the AKS cluster is deployed as a public cluster, and a Service Principal is created for managing access control. You are responsible for maintaining the Service Principal to keep the cluster functional.

- Deploy a public AKS cluster with an existing Service Principal

This use case is similar to the use case described above but uses an existing Service Principal instead of creating a new one.

- Deploy a private AKS cluster with a user-managed Azure Active Directory server

In this use case, the AKS cluster is deployed as a private cluster and a user-managed Azure Active Directory (AAD) server is used for identity and authorization management. In this case, you supply the AAD client and server applications and the AAD tenant.

- Deploy a private AKS cluster with an Azure-managed AAD server

In this use case, the AKS cluster is deployed as a private cluster and an Azure-managed AAD server is used for identity and authorization management. In this case, the AKS resource manager manages the AAD client and server applications.

- Deploy an AKS cluster and access a private Azure Container Registry

In this use case, an AKS cluster is deployed and accesses images that are maintained in a private Azure Container Registry.

- Deploy an AKS cluster that autoscales on demand

In this use case, an AKS cluster is deployed and the Cluster Autoscaler service is enabled. The Cluster Autoscaler automatically adds nodes to the node pool when demand increases and deprovisions nodes when demand decreases.

- Deploy an AKS cluster with the Monitoring Addon

In this use case, an AKS cluster is deployed and the Log Analytics monitoring service is enabled.

- Deploy an AKS cluster with RBAC enabled

In this use case, an AKS cluster is deployed and Role-Based Access Control (RBAC) is enabled. RBAC manages Kubernetes user identities and credentials. RBAC can be enabled in conjunction with other authorization modes, such as Azure Managed Identity or AAD.

- Deploy an AKS cluster using existing resources

In this use case, an AKS cluster is deployed without creating new network components. The cluster is deployed into an existing Virtual Network and uses existing resource groups and subnetworks.

- Deploy an AKS cluster with Proximity Placement Groups

In this use case, an AKS cluster is deployed with specified Proximity Placement Groups so that compute resources are placed physically close to each other, which reduces latency.

For a summary of the files in the az directory, see Download the Cluster Creation Scripts and Configuration Files. Specifics about the parameters in the sample files are included in Creating the AKS Cluster.

To get started on creating the AKS infrastructure, see Creating and Assigning IAM Roles for instructions on creating the IAM roles that are needed for assigning permissions to create and use the AKS cluster.