Re-Ingesting an Updated Data Source

Follow the instructions below to re-ingest the data for a data source whose schema has been updated. The procedure below focuses on configuring the workflow to reuse the existing model and update the mappings and ETL jobs for the existing pipeline. For instructions on ingesting a new data source, see Ingesting a New Data Source.

If the source data is updated but the schema does not change, or if the model or mappings are modified and the schema is not affected, you do not need to re-ingest the source using the Ingest workflow. You can simply republish the pipeline or the affected jobs in the pipeline. See Publishing a Pipeline or Subset of Jobs for more information.

For information about updating a CSV Data Source if a file changes, see How do I update Anzo if a file in my CSV data source changes?

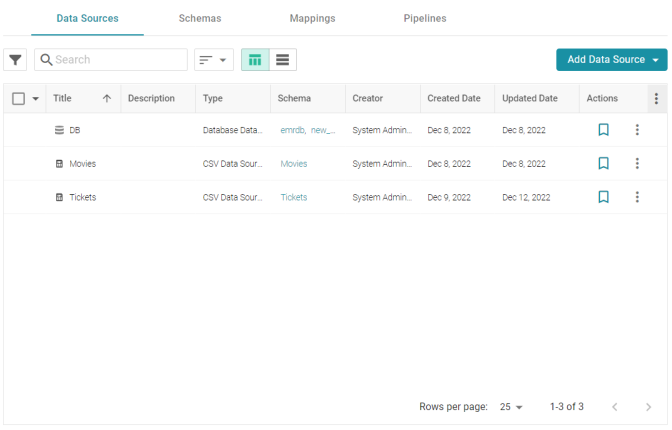

- In the Anzo application, expand the Onboard menu and click Structured Data. Anzo displays the Data Sources screen, which lists any existing sources. For example:

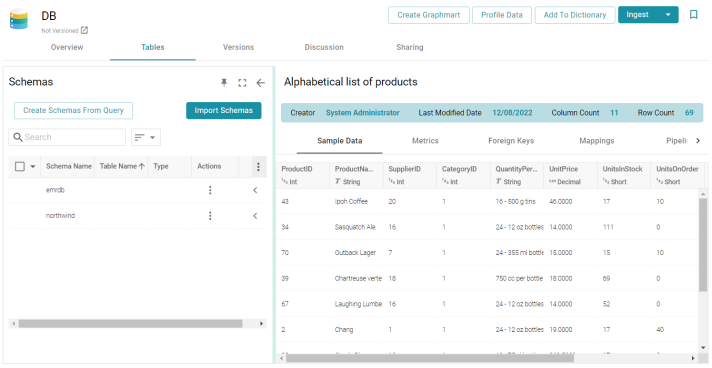

- On the Data Sources screen, click the name of the data source to re-ingest. Anzo displays the Tables screen. For example:

- Reload any changed schemas into Anzo by clicking the menu icon (

) in the Actions column for the schema and selecting Reload Schema. For example:

) in the Actions column for the schema and selecting Reload Schema. For example:

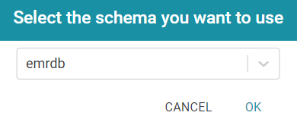

- Click the Ingest button. If the source has more than one schema, Anzo displays the select schema dialog box. In the drop-down list, select the schema to use, and then click OK. For example:

Anzo opens the Ingest dialog box. The options are populated with the values from the previous workflow configuration. For example:

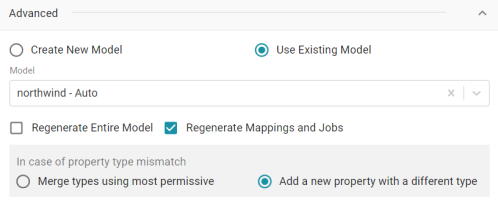

- Click Advanced to view additional configuration options. By default, the Ingest workflow is configured to use the Existing Model, and additional options are presented for controlling the regeneration of artifacts and the handling of property type mismatches. For example:

This list below describes the advanced options:

- Regenerate Entire Model: Selecting this option means that Anzo deletes all entities from the existing model and recreates them. The model that results from the current ingestion process will contain only the data from the current process.

If a previous run generated a model that contains classes A, B, and C, and the current data contains Classes C, D, and E, selecting Regenerate Entire Model results in a model that contains only classes C, D, and E. If Regenerate Entire Model is NOT selected, the resulting model will contain classes A, B, C, D, and E.

- Regenerate Mappings and Jobs: Selecting this option means that Anzo deletes all entities from the existing mappings and jobs and recreates them. The artifacts that result from the current ingestion process will contain only the data from the current process.

If a previous run generated mappings and jobs that contain tables A and B and the current run is ingesting tables C and D, selecting Regenerate Mappings and Jobs results in artifacts that contain only tables C and D. If Regenerate Mappings and Jobs is NOT selected, the resulting artifacts contain tables A, B, C, and D.

- Merge types using most permissive: Anzo looks at the inferred types in both versions of the Schema (the old and new versions) and chooses the type that covers all inputs. In most cases Anzo sets the type to String.

- Add a new property with a different type: If Anzo encounters a type mismatch, it adds a new property with the new type to the existing Model.

When associating column names in the new schema with the existing model, the match is case-insensitive. Anzo matches the names based on the spelling. For example, "myInt" matches "MYint."

- Regenerate Entire Model: Selecting this option means that Anzo deletes all entities from the existing model and recreates them. The model that results from the current ingestion process will contain only the data from the current process.

- Click Save. Anzo updates the pipeline and regenerates or updates the model and mappings according to the options you specified.

- In the main navigation menu under Onboard, click Structured Data. Then click the Pipelines tab.

- Click the name of the pipeline you configured. Anzo displays the Pipeline Overview screen. For example:

- To run all of the jobs, click the Publish All button at the top of the screen. To publish a subset of the jobs, select the checkbox next to each job that you want to run and then click the Publish button above the list of jobs. Anzo runs the pipeline and generates the resulting file-based linked data set in a new subdirectory under the specified Anzo data store.

When the pipeline finishes, this run of the pipeline becomes the Managed Edition. The Managed Edition always contains the latest successfully published data for all of the jobs in the pipeline. For more information about editions, see Managing Dataset Editions. If an existing graphmart contains the resulting dataset, refresh or reload the graphmart to load the modified data. See Working with Graphmarts for information about working with graphmarts.