Dynamic Deployment Architecture and Process Overview

Anzo supports cloud-based dynamic deployments using Amazon Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS). These managed services enable enterprises to deploy Kubernetes (K8s) based applications on demand without needing to maintain the K8s control plane. The K8s services interact with other services on the platform to provide:

- User role management and authentication.

- Instance provisioning and deprovisioning.

- Isolated networking for deploying and maintaining clusters.

- A container registry for maintaining container images.

- Network load balancing for the hosted applications.

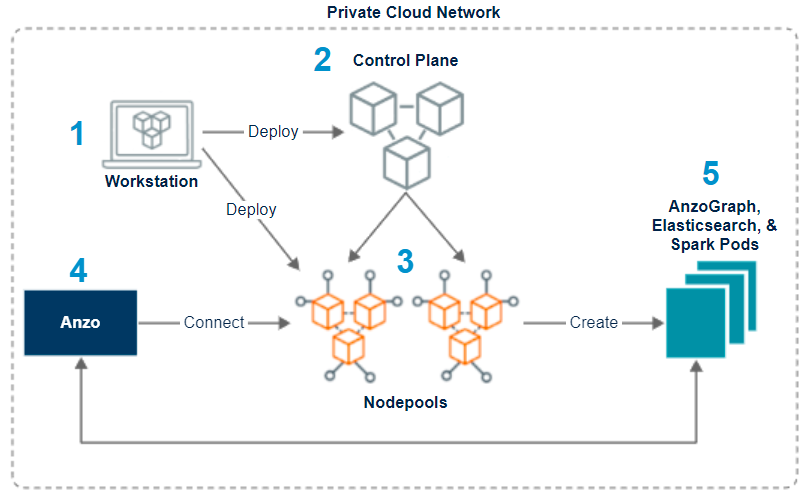

The process for setting up the infrastructure that enables users to deploy applications on demand with Anzo is the same on each platform: provision a K8s cluster on your preferred platform, and then register the cluster in Anzo. Cambridge Semantics supplies scripts and configuration files that help you create clusters and customize the K8s worker nodes to host a variety of applications and the controllers that manage them. The accompanying diagram shows a simplified view of the process and architecture.

- First, you configure a workstation to use for creating and managing the K8s infrastructure. The workstation needs to have the required cloud provider software packages as well as the deployment scripts and configuration files supplied by Cambridge Semantics. This workstation will be used to connect to the K8s API endpoint and provision the K8s cluster and its nodepools.

- Next, you deploy the K8s control plane, the master nodes that manage the cluster.

- Then you create any number of nodegroups or nodepools in the K8s cluster. These are the worker instances that are configured according to the requirements of the applications that will be deployed, for example, an AnzoGraph nodepool, an Elasticsearch nodepool, or a Spark nodepool. The nodepool configuration also includes any restrictions that you want to apply, such as the size and number of instances that can be used for the application and whether the instances remain deployed or are destroyed when the application is not in use.

- Once the K8s infrastructure is in place, you configure a "Cloud Location" in Anzo. The K8s cluster and credentials are registered in Anzo so that Anzo can connect to the nodepools and the configured services become available for users to deploy on-demand.

- Users deploy applications as needed through Anzo. Cambridge Semantics hosts all container images in a public repository. You can choose to deploy images from that repository or maintain an internal container registry and deploy images from there.
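
To make the nodepool restrictions and the image-source choice described above more concrete, the following minimal sketch models them in Python. It is illustrative only: `NodepoolSpec`, `image_reference`, the field names, the instance type labels, and the `registry.example.com` hosts are all hypothetical and do not reflect the actual configuration files supplied by Cambridge Semantics or any cloud provider schema.

```python
from dataclasses import dataclass
from typing import Optional

# Placeholder for a public image repository; not a real registry address.
PUBLIC_REGISTRY = "registry.example.com/public"


@dataclass(frozen=True)
class NodepoolSpec:
    """Hypothetical description of one nodepool (not an Anzo schema)."""
    name: str           # application the pool hosts, e.g. "anzograph" or "spark"
    instance_type: str  # provider-specific machine size (placeholder label)
    min_nodes: int      # 0 means instances are destroyed when the app is idle
    max_nodes: int      # upper bound on instances the application may use

    def __post_init__(self) -> None:
        # Reject restrictions that could never be satisfied.
        if self.min_nodes < 0:
            raise ValueError(f"{self.name}: min_nodes must be >= 0")
        if self.max_nodes < max(self.min_nodes, 1):
            raise ValueError(f"{self.name}: max_nodes must be >= max(min_nodes, 1)")

    @property
    def scales_to_zero(self) -> bool:
        """True when no instances remain deployed while the app is not in use."""
        return self.min_nodes == 0


def image_reference(image: str, tag: str,
                    internal_registry: Optional[str] = None) -> str:
    """Build an image reference, preferring an internal registry if one is given."""
    registry = internal_registry or PUBLIC_REGISTRY
    return f"{registry.rstrip('/')}/{image}:{tag}"


# Example: one pool that is torn down when idle, one that stays deployed.
pools = [
    NodepoolSpec("anzograph", "large-memory", min_nodes=0, max_nodes=4),
    NodepoolSpec("elasticsearch", "general-purpose", min_nodes=1, max_nodes=3),
]
idle_teardown = [p.name for p in pools if p.scales_to_zero]
```

In this sketch, a minimum of zero nodes stands in for "destroyed when the application is not in use", and passing `internal_registry` stands in for deploying from an internally maintained registry instead of the public repository.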